Dealing with Unlabeled Data by Semi-Supervised Learning

What’s Semi-Supervised Learning

In the real world we often don’t have enough labeled data, and human annotation is time-consuming. Semi-supervised learning provides several ways to leverage unlabeled data together with the labeled data we have, under different scenarios and assumptions.

Two Types of Learning From Unlabeled Data

Inductive learning: The unlabeled data is part of the training set and is not the test data.

Transductive learning: The unlabeled data is the test data itself (for example, the data seen in the production environment).

Generative Model

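The generative model approach learns from both the labeled and the unlabeled data by applying the EM algorithm.

Use the given labeled data to estimate the initial parameters: the prior probability of each class and the parameters of the selected generative model.

Assign each unlabeled data point its posterior probability for each class under the selected generative model (E-step).

Update the parameters to maximize the likelihood of the labeled and unlabeled data, weighting each unlabeled point by its posterior probability (M-step), then repeat from the previous step. The EM algorithm converges, but only to a local optimum, so the result depends on the initialization.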
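The steps above can be sketched in a few lines of plain Python. This is only a minimal illustration under stated assumptions: two classes, one-dimensional inputs, and a Gaussian per class as the generative model. The function names and the toy data are made up for this example.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def semi_supervised_em(labeled, unlabeled, n_iter=50):
    """labeled: list of (x, class) pairs with class in {0, 1}; unlabeled: list of x."""
    classes = (0, 1)
    # Initialization: estimate priors, means and variances from the labeled data only.
    prior, mean, var = {}, {}, {}
    for c in classes:
        xs = [x for x, y in labeled if y == c]
        prior[c] = len(xs) / len(labeled)
        mean[c] = sum(xs) / len(xs)
        var[c] = max(sum((x - mean[c]) ** 2 for x in xs) / len(xs), 1e-3)

    n_total = len(labeled) + len(unlabeled)
    for _ in range(n_iter):
        # E-step: posterior probability of each class for every unlabeled point.
        posteriors = []
        for x in unlabeled:
            joint = {c: prior[c] * gaussian_pdf(x, mean[c], var[c]) for c in classes}
            norm = sum(joint.values())
            posteriors.append({c: joint[c] / norm for c in classes})

        # M-step: labeled points count with weight 1,
        # unlabeled points count with their posterior weight.
        for c in classes:
            pts = [(x, 1.0) for x, y in labeled if y == c]
            pts += [(x, p[c]) for x, p in zip(unlabeled, posteriors)]
            weight = sum(w for _, w in pts)
            prior[c] = weight / n_total
            mean[c] = sum(w * x for x, w in pts) / weight
            var[c] = max(sum(w * (x - mean[c]) ** 2 for x, w in pts) / weight, 1e-3)
    return prior, mean, var

# Toy data (hypothetical): two labeled points per class, ten unlabeled points.
labeled = [(0.0, 0), (1.0, 0), (5.0, 1), (6.0, 1)]
unlabeled = [-1.0, -0.5, 0.5, 1.5, 2.0, 4.0, 4.5, 5.5, 6.5, 7.0]
prior, mean, var = semi_supervised_em(labeled, unlabeled)
print(prior, mean, var)
```

The variance floor of 1e-3 is a practical guard added for this sketch so that a class never collapses onto a single point. It is not part of the EM algorithm itself.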

Low-Density Assumption

Assume that the classes are clearly separated, so the decision boundary between them lies in a region of low data density.

Self-Training

Step 1. Train the model on the labeled data.

Step 2. Compute the classification confidence for each unlabeled data point.

Step 3. Select the unlabeled data points with the top K confidence scores, label them with the model’s predictions, and move them into the labeled set.

Step 4. Repeat Steps 1 to 3 until there is no unlabeled data left.
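A minimal sketch of this loop in plain Python is shown below. The model here is a toy nearest-centroid classifier, and the confidence is a softmax over negative squared distances. Both are assumptions chosen to keep the example self-contained, and any classifier that outputs a confidence score could take their place. All function names and data are hypothetical.

```python
import math

def fit_centroids(points, labels):
    """Step 1: 'train' a toy model by computing the mean point of each class."""
    centroids = {}
    for c in set(labels):
        members = [p for p, y in zip(points, labels) if y == c]
        centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return centroids

def predict_with_confidence(centroids, point):
    """Step 2: return (predicted class, confidence) for one point."""
    scores = {c: -sum((a - b) ** 2 for a, b in zip(point, ctr))
              for c, ctr in centroids.items()}
    top = max(scores.values())
    exp_scores = {c: math.exp(s - top) for c, s in scores.items()}
    best = max(exp_scores, key=exp_scores.get)
    return best, exp_scores[best] / sum(exp_scores.values())

def self_training(points, labels, unlabeled, k=2):
    points, labels, pool = list(points), list(labels), list(unlabeled)
    while pool:  # Step 4: repeat until no unlabeled data is left
        centroids = fit_centroids(points, labels)
        scored = [(predict_with_confidence(centroids, p), p) for p in pool]
        scored.sort(key=lambda item: item[0][1], reverse=True)
        # Step 3: move the K most confident points into the labeled set.
        for (pred, _conf), p in scored[:k]:
            points.append(p)
            labels.append(pred)
            pool.remove(p)
    return points, labels

labeled_points = [(0.0, 0.0), (4.0, 4.0)]
labeled_classes = ["a", "b"]
unlabeled_points = [(0.5, 0.2), (1.0, 1.0), (1.5, 1.2), (3.8, 4.1), (3.0, 3.0), (2.6, 2.9)]
all_points, all_labels = self_training(labeled_points, labeled_classes, unlabeled_points)
result = dict(zip(all_points, all_labels))
print(result)
```

Because pseudo-labels are never revised in this sketch, an early mistake would be carried into every later round. In practice K is usually kept small for that reason.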

Note: For models such as SVM, the output does not represent a likelihood. A calibration technique can be applied to map the output scores to estimated probabilities, using data with ground-truth labels.
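The note does not say which calibration technique is meant. One common choice is Platt-style sigmoid scaling, sketched below in plain Python as an assumption: a sigmoid is fitted to the raw scores of held-out data with ground-truth labels. The scores and labels are made up for illustration, and the plain gradient-descent fit is a simplification. scikit-learn users would more likely reach for `CalibratedClassifierCV`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_platt(scores, labels, lr=0.1, n_iter=2000):
    """Fit p(y=1 | score) = sigmoid(a * score + b) by gradient descent on the log loss."""
    a, b = 1.0, 0.0
    n = len(scores)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = sigmoid(a * s + b)
            grad_a += (p - y) * s
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

def calibrated_probability(score, a, b):
    """Map a raw decision score to an estimated probability of class 1."""
    return sigmoid(a * score + b)

# Hypothetical raw decision scores (e.g. signed distances to an SVM hyperplane)
# and their ground-truth labels; two points overlap so the fit stays finite.
scores = [-2.0, -1.5, -1.0, -0.5, 0.3, -0.2, 0.5, 1.0, 1.5, 2.0]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
a, b = fit_platt(scores, labels)
print(calibrated_probability(2.0, a, b), calibrated_probability(-2.0, a, b))
```

The calibrated probabilities can then serve as the confidence scores in Step 2 of self-training.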

Smoothness Assumption

The input data distribution is not uniform, and data points that are close to each other within a high-density region are treated as belonging to the same class.

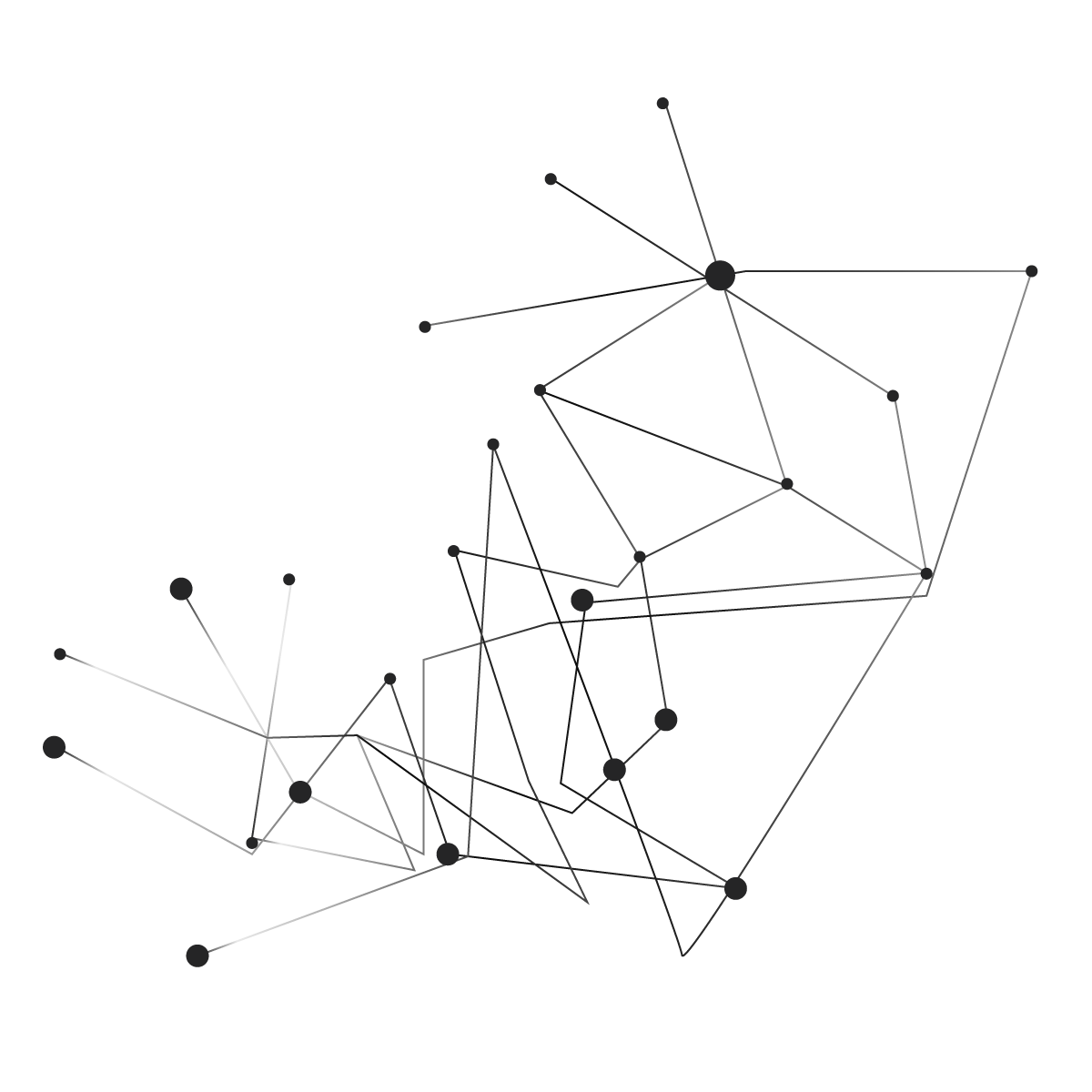

Graph-Based Approach

Use an encoder to extract features so that data points with similar properties lie close together. Label a few of the data points in each cluster, then label the remaining unlabeled data according to the labels of their neighbors.

Define the similarity measure, e.g. KNN or an RBF kernel.

Construct the graph by computing the similarity between data points.

Label each unlabeled data point with the label of the most similar data point near it, if that point already has a label.

Repeat the label propagation process until the system reaches the convergence tolerance, i.e. the propagated labels have stopped changing appreciably.
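The graph-based steps above can be sketched in plain Python as follows. One assumption to flag: instead of copying the single most similar neighbor’s label, this version propagates soft label distributions weighted by RBF similarity, which is a common formulation of label propagation. The function names, `gamma`, and the toy points are hypothetical.

```python
import math

def rbf_similarity(p, q, gamma=1.0):
    """RBF similarity: 1 for identical points, decaying towards 0 with distance."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(p, q)))

def propagate_labels(points, labels, n_classes, gamma=1.0, tol=1e-6, max_iter=1000):
    """labels[i] is a class index (0..n_classes-1), or None if point i is unlabeled."""
    n = len(points)
    # Build a fully connected graph; each row of edge weights is normalized to sum to 1.
    weights = []
    for i in range(n):
        row = [0.0 if i == j else rbf_similarity(points[i], points[j], gamma)
               for j in range(n)]
        total = sum(row)
        weights.append([w / total for w in row])

    # Labeled points start as one-hot distributions, unlabeled points as uniform ones.
    dist = []
    for y in labels:
        if y is None:
            dist.append([1.0 / n_classes] * n_classes)
        else:
            dist.append([1.0 if c == y else 0.0 for c in range(n_classes)])

    for _ in range(max_iter):
        new_dist = []
        for i in range(n):
            if labels[i] is not None:
                new_dist.append(dist[i])  # known labels stay fixed
            else:
                new_dist.append([
                    sum(weights[i][j] * dist[j][c] for j in range(n))
                    for c in range(n_classes)
                ])
        change = max(abs(a - b)
                     for new, old in zip(new_dist, dist)
                     for a, b in zip(new, old))
        dist = new_dist
        if change < tol:  # convergence tolerance reached
            break
    return [max(range(n_classes), key=lambda c: row[c]) for row in dist]

# Two clusters on a line, with one labeled point in each cluster.
points = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (5.0, 0.0), (5.5, 0.0), (6.0, 0.0)]
labels = [0, None, None, None, None, 1]
predicted = propagate_labels(points, labels, n_classes=2)
print(predicted)
```

Each unlabeled point ends up with the label of the cluster it is connected to, even though only one point per cluster was labeled at the start.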

Scikit-Learn:

LabelPropagation

Note: Usually, the input features should be standardized (for example with StandardScaler) so that the similarity (distance function) treats the different feature dimensions on a comparable scale, which tends to give better performance.
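A short sketch of combining the two in scikit-learn is shown below. The data is made up, with the second feature deliberately on a much larger scale than the first, and the hyperparameter values are just the library defaults written out explicitly. In scikit-learn, unlabeled samples are marked with the label -1.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.semi_supervised import LabelPropagation

# Toy data (hypothetical): two clusters, features on very different scales.
X = np.array([
    [0.0, 1000.0], [0.2, 1200.0], [0.4, 1100.0],
    [5.0, 3000.0], [5.2, 3200.0], [5.4, 3100.0],
])
y = np.array([0, -1, -1, 1, -1, -1])  # -1 marks the unlabeled samples

# Standardize first, then propagate labels over an RBF similarity graph.
model = make_pipeline(
    StandardScaler(),
    LabelPropagation(kernel="rbf", gamma=20, max_iter=1000, tol=1e-3),
)
model.fit(X, y)

# Labels assigned to the training points, including the originally unlabeled ones.
transduced = model[-1].transduction_
print(transduced)
```

Putting the scaler inside the pipeline means the same scaling is applied again when `model.predict` is called on new data.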

Reference

[1] Hung-yi Lee - ML Lecture 12: Semi-supervised

[2] Learning with not Enough Data Part 1: Semi-Supervised Learning
